Most Facebook users support the site's new content rules, but half think Facebook should be more proactive in enforcing them

Facebook has published far more detailed rules about what content can and cannot be posted on its website. Facebook says the actual changes to what is allowed are minimal, but the announcement marks the first time the company has released a detailed document spelling out exactly what users can and cannot post. Users will still be unable to post photos showing bare buttocks or female nipples, but they will be able to post photos of breastfeeding and of breasts with mastectomy scars. Content supporting terrorism will remain banned, and the rules preventing users from celebrating criminal acts may have been strengthened.

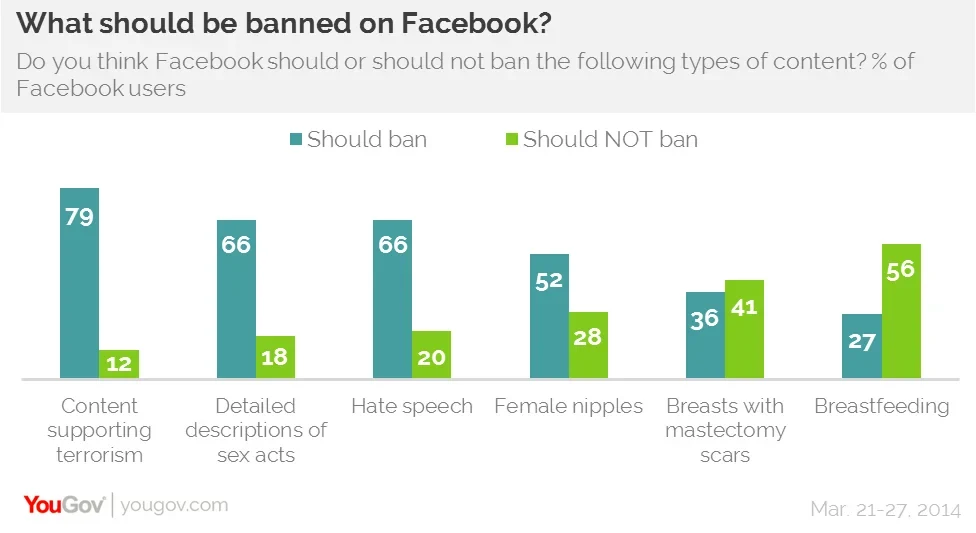

YouGov's latest research shows that most Facebook users agree with almost all of the site's new rules. The most widely supported rule is the ban on content supporting terrorism, which 79% of American Facebook users say should be banned. Majorities of users also support banning other types of content, from detailed descriptions of sex acts and hate speech (66%) to images with exposed female nipples (52%). A majority (56%) agree with Facebook that photos of women breastfeeding should be allowed. The one area where the Facebook-using public is not solidly behind the company is photos of women with mastectomy scars: 41% of Americans who use Facebook agree that such photos should not be banned, while 36% say they should be.

There is something of a gender divide on certain content. Women are much more supportive than men of banning female nipples: 61% of women favor the ban and 20% oppose it. Men are evenly split, with 40% in favor and 38% against. On photos of breasts with mastectomy scars, however, women back allowing them by 43% to 34%, while men are split evenly, with 40% supporting a ban and 40% opposed.

Only 28% of the site's users think that Facebook generally does a bad job of moderating inappropriate content, while 35% say it does a good job and 37% aren't sure either way. One point of contention, however, is that half of Facebook users think the company should actively scan for inappropriate content instead of relying on users to report it. Although Facebook has some methods for proactively taking down inappropriate content, it generally relies on users to report content that breaks the rules. Only 35% of American Facebook users are happy with this current system of relying on user reports.

Full poll results can be found here and topline results and margin of error here.